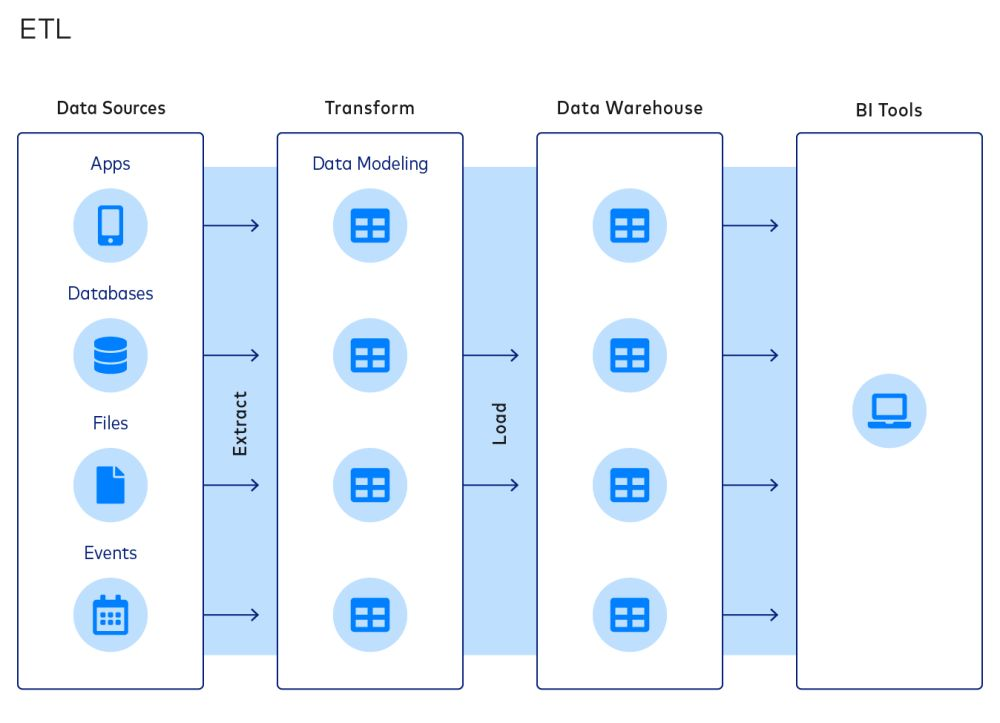

The ETL process ensures that the data is consistent, trustworthy, and in the right format for further processing. Here, we have taken standard data for building ML models. For some variables, missing values are filled with zeros: month_since_earliest_cr_line, acc_now_delinq, total_acc, pub_rec, open_acc, inq_last_6mnths, and delinq_2years.
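The zero-fill step can be sketched as follows. This is a minimal illustration that assumes each record is a plain dictionary and that a missing value shows up as `None` or an absent key; the helper name `fill_missing_with_zero` and the sample rows are hypothetical and do not come from the original pipeline.

```python
# Columns named in the text; missing values in these are replaced with 0.
ZERO_FILL_COLUMNS = [
    "month_since_earliest_cr_line", "acc_now_delinq", "total_acc",
    "pub_rec", "open_acc", "inq_last_6mnths", "delinq_2years",
]


def fill_missing_with_zero(rows, columns=ZERO_FILL_COLUMNS):
    """Return copies of the rows with None or absent values in `columns` set to 0."""
    cleaned = []
    for row in rows:
        new_row = dict(row)  # copy so the input rows are left untouched
        for col in columns:
            if new_row.get(col) is None:
                new_row[col] = 0
        cleaned.append(new_row)
    return cleaned


# Hypothetical sample records, for illustration only.
sample = [
    {"total_acc": 12, "open_acc": None, "pub_rec": 1},
    {"total_acc": None, "open_acc": 4},
]
cleaned = fill_missing_with_zero(sample)
```

Filling with zero is only reasonable when a missing count plausibly means "none"; for other variables a different imputation rule may fit better.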

Implementers can spin up new data and analytical assets, or do maintenance on existing assets, without introducing "creative" (non-standard) data into these critical components. Regardless of where the data resides (on-premises, in the cloud, in a relational database or not), these data sets stay the same, which makes them much simpler for everyone to use. Search for and select the best commercial or open-source ETL, database monitoring, and data quality test automation tools that support the technologies used in your ETL project. The decision to adopt automated tools for ETL testing depends on a budget that can cover the additional spending needed to meet advanced testing requirements.

Transformation Explained

In addition, these tools offer advanced capabilities such as data profiling and data cleansing. The next step is to transform this data to make it uniform by applying a set of business rules (such as aggregations, joins, sorts, and unions). However, these early solutions required manual effort: writing scripts that also had to be adjusted regularly for different data sources. Talend is a complete data integration platform that increases the power and value of data. It integrates, cleanses, governs, and delivers the right data to the right users.

Figure 5 shows the step-by-step guide to building the neural network. One independent variable is represented by several dummy variables. If none of them is statistically significant, those dummy variables should be removed. If one or a few of the dummy variables representing an independent variable are significant, then all dummy variables corresponding to that independent variable are kept.
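The keep-or-drop rule for dummy variables can be written as a small helper. This is a sketch under stated assumptions: the p-values are taken as already computed by whatever model was fitted, the 0.05 significance threshold is an assumption rather than a value given in the text, and the variable names are hypothetical.

```python
def select_dummies(p_values, groups, alpha=0.05):
    """Keep every dummy of a variable if at least one of its dummies is significant.

    p_values: mapping of dummy name -> p-value from the fitted model.
    groups:   mapping of original variable -> list of its dummy names.
    alpha:    significance threshold (assumed, not specified in the text).
    """
    kept = []
    for variable, dummies in groups.items():
        if any(p_values[d] < alpha for d in dummies):
            kept.extend(dummies)  # one significant dummy keeps the whole group
    return kept


# Hypothetical p-values for two original variables.
p_values = {"grade:A": 0.001, "grade:B": 0.30, "home:RENT": 0.40, "home:OWN": 0.62}
groups = {"grade": ["grade:A", "grade:B"], "home": ["home:RENT", "home:OWN"]}
kept = select_dummies(p_values, groups)
```

Here `grade` is kept as a whole because `grade:A` is significant, while both `home` dummies are dropped.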

ETL test automation tools need to provide robust security features, and ETL test processes should be designed with security and compliance in mind. Automated ETL processes must be designed to handle errors gracefully. If an error occurs during extraction, transformation, or loading, the process needs to be able to recover without losing data or causing downstream issues. Entering or retrieving data manually is one of the pain points in large enterprises. Manually transferring large volumes of data between different sources and data warehouses is an inefficient, error-prone, and difficult process. For example, an international company suffered a USD 900 million financial loss because of a human error in the manual entry of loan payments.
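One common way to recover from a load error without leaving partial data behind is to wrap each batch in a database transaction. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `payments` table, its columns, and the `load_batch` helper are hypothetical and only illustrate the idea.

```python
import sqlite3


def load_batch(conn, rows):
    """Insert all rows atomically; roll back the whole batch if any row fails."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.executemany(
                "INSERT INTO payments (id, amount) VALUES (?, ?)", rows
            )
        return True
    except sqlite3.Error:
        # The batch was rolled back, so the target table is unchanged.
        return False


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, amount REAL NOT NULL)")
conn.commit()

ok_first = load_batch(conn, [(1, 100.0), (2, 250.5)])
# The second batch repeats id 1, so the whole batch is rejected, including id 3.
ok_second = load_batch(conn, [(3, 75.0), (1, 10.0)])
row_count = conn.execute("SELECT COUNT(*) FROM payments").fetchone()[0]
```

A real pipeline would usually also log the failed batch and route it to a retry or quarantine step rather than simply returning `False`.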

Use of ETL Tools

First, we need to decide the minimum score and the maximum score. Each observation falls into exactly one dummy category of each original independent variable. The PD model gives the maximum creditworthiness assessment when a borrower falls into the categories of the original independent variables with the highest model coefficients. Similarly, the minimum creditworthiness is reached when a borrower falls into the category with the lowest model coefficient for every variable. Interpretability is extremely important for the PD model, as it is required by regulators.
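The minimum and maximum described above can be computed directly from the model coefficients and then mapped onto a score range. This is a minimal sketch: the linear rescaling, the 300 to 850 range, the omission of an intercept, and the example coefficients are all assumptions for illustration and are not taken from the original model.

```python
def coefficient_bounds(coefs_by_variable):
    """Sum the lowest and the highest category coefficient of every variable."""
    min_sum = sum(min(cats.values()) for cats in coefs_by_variable.values())
    max_sum = sum(max(cats.values()) for cats in coefs_by_variable.values())
    return min_sum, max_sum


def to_score(raw, min_sum, max_sum, min_score=300, max_score=850):
    """Linearly rescale a coefficient sum onto [min_score, max_score] (assumed range)."""
    return min_score + (raw - min_sum) * (max_score - min_score) / (max_sum - min_sum)


# Hypothetical coefficients; reference categories carry a coefficient of 0.
coefs = {
    "grade": {"A": 1.0, "B": 0.5, "C": 0.0},
    "home": {"OWN": 0.5, "RENT": 0.0},
}
min_sum, max_sum = coefficient_bounds(coefs)

# A borrower falls into exactly one category per variable.
borrower = {"grade": "B", "home": "OWN"}
raw = sum(coefs[var][cat] for var, cat in borrower.items())
score = to_score(raw, min_sum, max_sum)
```

Because every borrower's coefficient sum lies between `min_sum` and `max_sum`, every score lands inside the chosen range, and each category's contribution to the score can be read off directly.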

- Automation helps to streamline workflows and to better fit the schema of the target data warehouse.

- However, ETL comes with several challenges, which you need to be aware of and take the necessary steps to mitigate.

- ETL typically summarizes data to reduce its size and improve performance for specific types of analysis.

- This protects data against faulty logic, failed loads, or operational processes whose data is not loaded into the system.

- ETL dates back to the 1970s, when businesses began using mainframe computers to store transactional data from across their operations.

In fact, the same resource can perform all the data integration steps without any handoffs. This makes adopting an agile methodology not just possible but compelling. ETL (i.e., extract, transform, load) projects often lack automated testing. ETL tools provide a variety of transformation functions that let users define data transformation rules and processes without custom coding. These can include de-duplication, date format conversion, field merging, and so on.
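To make the three transformations named above concrete, here is a hand-written sketch of what an ETL tool would typically configure without code. The record layout, the field names, and the assumption that source dates arrive as `DD/MM/YYYY` are hypothetical.

```python
from datetime import datetime


def transform(records):
    """De-duplicate by id, merge the name fields, and convert dates to ISO format."""
    seen_ids = set()
    result = []
    for rec in records:
        if rec["id"] in seen_ids:
            continue  # de-duplication: keep only the first record per id
        seen_ids.add(rec["id"])
        parsed = datetime.strptime(rec["signup_date"], "%d/%m/%Y")
        result.append({
            "id": rec["id"],
            # field merging: two source fields become one target field
            "full_name": f'{rec["first_name"]} {rec["last_name"]}',
            # date format conversion: DD/MM/YYYY -> YYYY-MM-DD
            "signup_date": parsed.strftime("%Y-%m-%d"),
        })
    return result


# Hypothetical source records; the second one duplicates id 1.
records = [
    {"id": 1, "first_name": "Ada", "last_name": "Lovelace", "signup_date": "17/10/2022"},
    {"id": 1, "first_name": "Ada", "last_name": "Lovelace", "signup_date": "17/10/2022"},
    {"id": 2, "first_name": "Alan", "last_name": "Turing", "signup_date": "21/02/2023"},
]
transformed = transform(records)
```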

Using a list of test scenarios like this is a good start on your way to implementing ETL test automation. Unlike batch scheduling, ETL automation uses a rule-based approach to detect and remove exceptions. Without manual effort from staff, it automatically prevents escalations and logs errors. Meanwhile, the data transfer process continues to run without interruptions or delays. Whether automated or not, data collection and entry errors are inevitable.

Organizations can choose either paid or free open-source data replication tools. Paid tools usually come with quality support, up-to-date documentation, and regular product updates to keep up with changes in data sources and customer requirements, while free open-source tools let organizations customize the tool to their needs. This tool also provides a standard set of commands to cleanse and record your data.